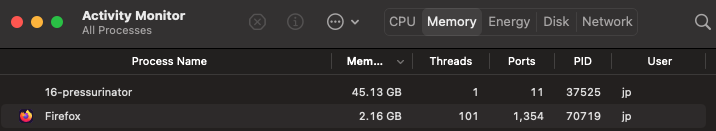

Recently at work I came across a problem where I had to go through a year’s worth of logs and corelate two different fields across all of our requests. On the good side, we have the logs stored as JSON objects (archived from Datadog which collects them). On the down side… it’s kind of a huge amount of data. Not as much as I’ve dealt with at previous jobs/in some academic problems, but we’re still talking on the order of terabytes.

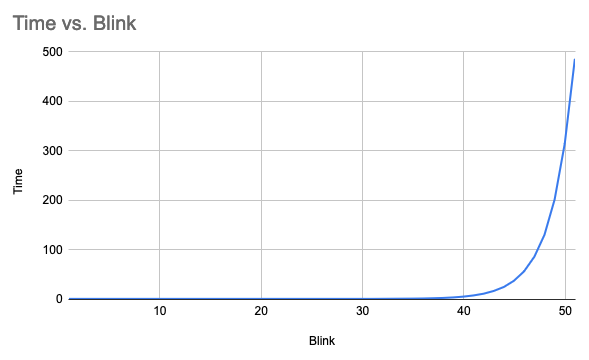

On one hand, write up a quick Python script, fire and forget. It takes maybe ten minutes to write the code and (for this specific example) half an hour to run it on the specific cloud instance the logs lived on. So we’ll start with that. But then I got thinking… Python is supposed to be super slow right? Can I do better?

(Note: This problem is mostly disk bound. So Python actually for the most part does just fine.)

read more...