Overview

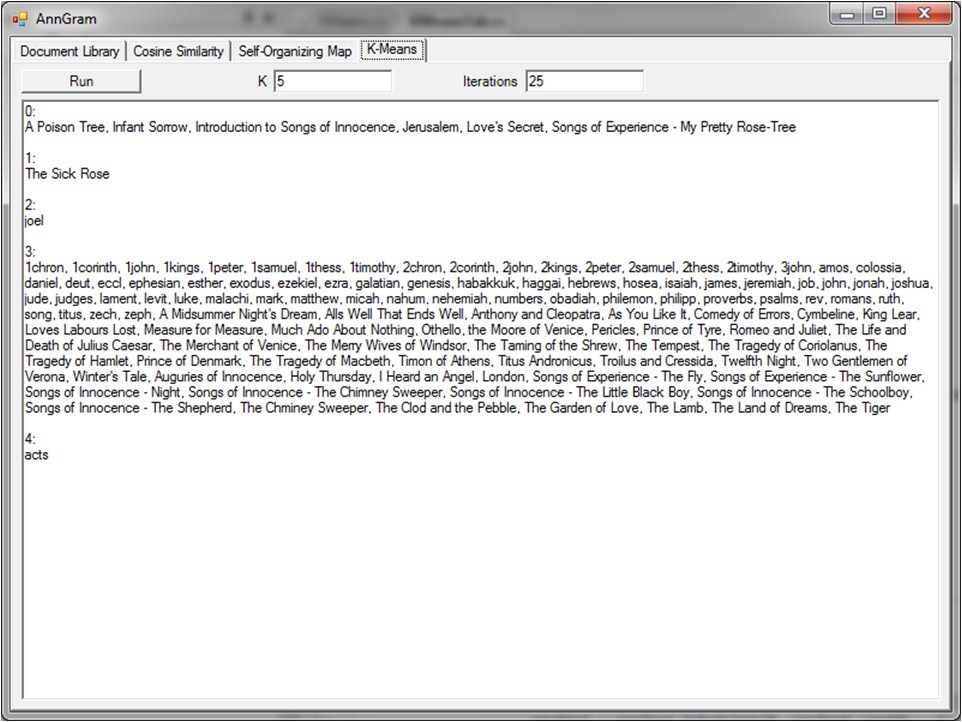

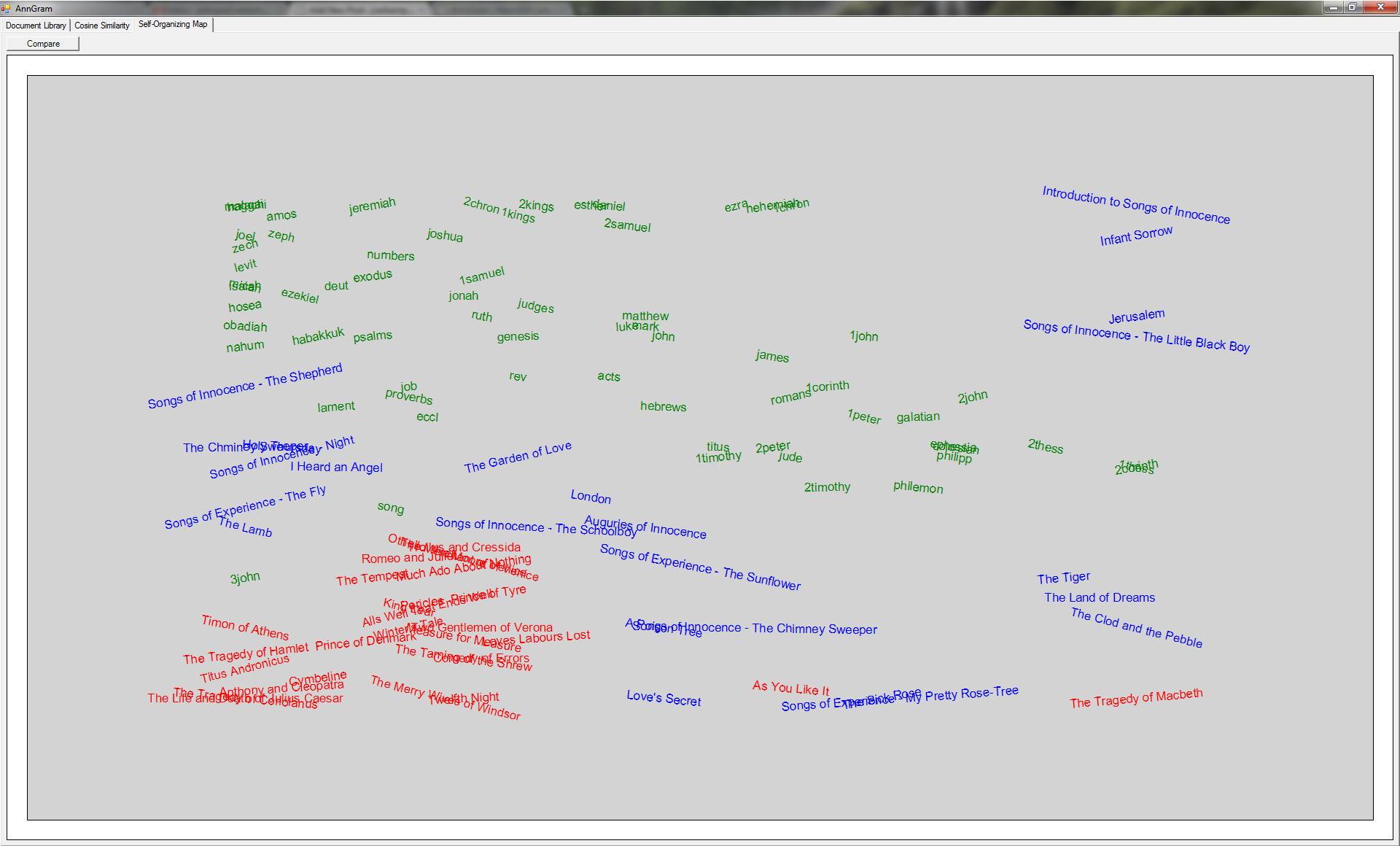

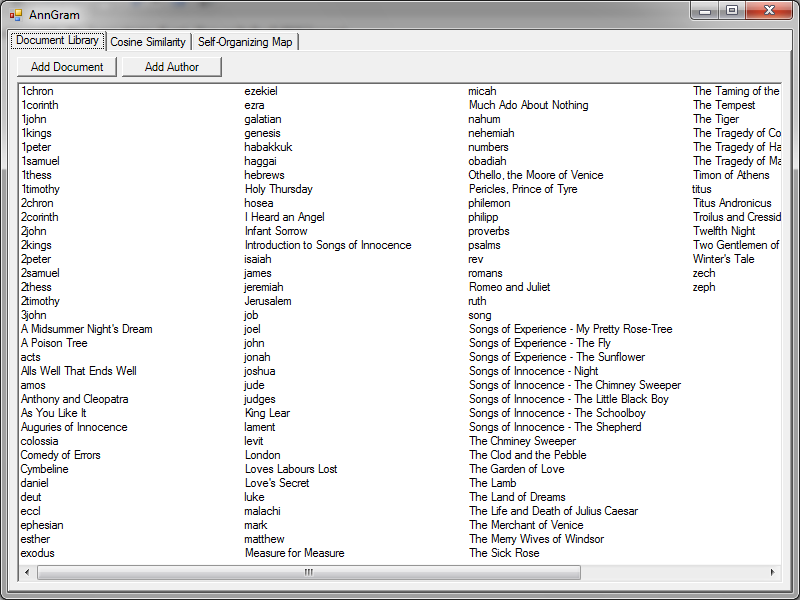

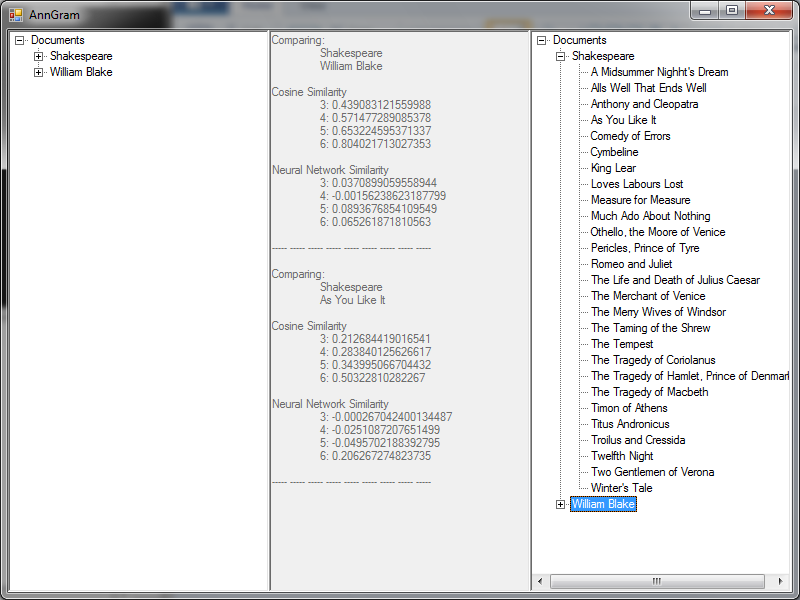

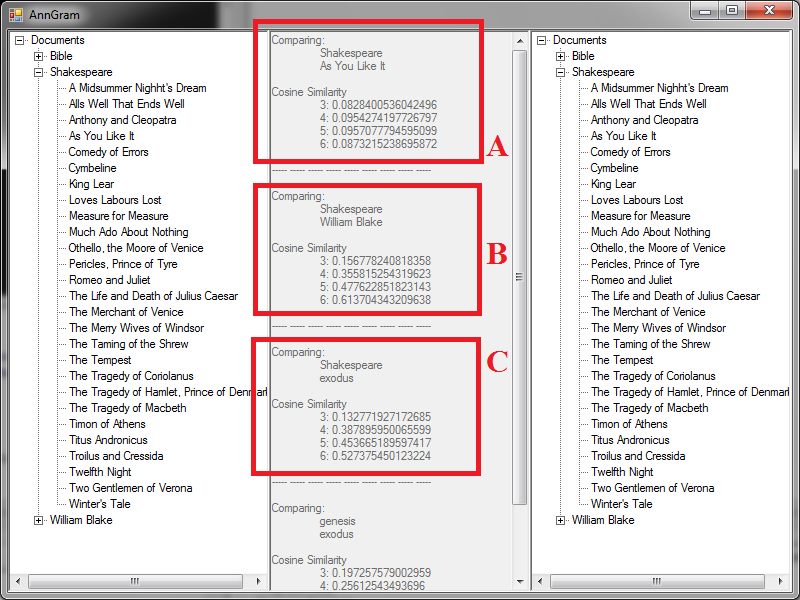

For another comparison, I’ve been looking for a way to replace the nGrams with a different method of turning a document into a vector. I’ve run a number of tests using vectors based on word frequency instead of nGrams to see how the accuracy and speed of the algorithm compare between the two.
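To make the two approaches concrete, here is a minimal sketch of both ways of turning a document into a vector. It is not the code behind the results in this post. It assumes character-level nGrams with n = 3 and uses cosine similarity to compare documents; the post does not specify either choice, and the function names `ngram_vector`, `word_frequency_vector`, and `cosine_similarity` are hypothetical.

```python
import math
import re
from collections import Counter


def ngram_vector(text, n=3):
    """Count overlapping character n-grams (assumption: character-level, n=3)."""
    # Lowercase and collapse whitespace so line breaks do not create extra n-grams.
    cleaned = re.sub(r"\s+", " ", text.lower()).strip()
    return Counter(cleaned[i:i + n] for i in range(len(cleaned) - n + 1))


def word_frequency_vector(text):
    """Count how often each word occurs in the text."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors (an assumed metric)."""
    dot = sum(count * b[key] for key, count in a.items() if key in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)
```

Either function can feed the same comparison step, for example `cosine_similarity(word_frequency_vector(doc_a), word_frequency_vector(doc_b))`, so only the vectorizer needs to be swapped when comparing accuracy and speed.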

nGrams

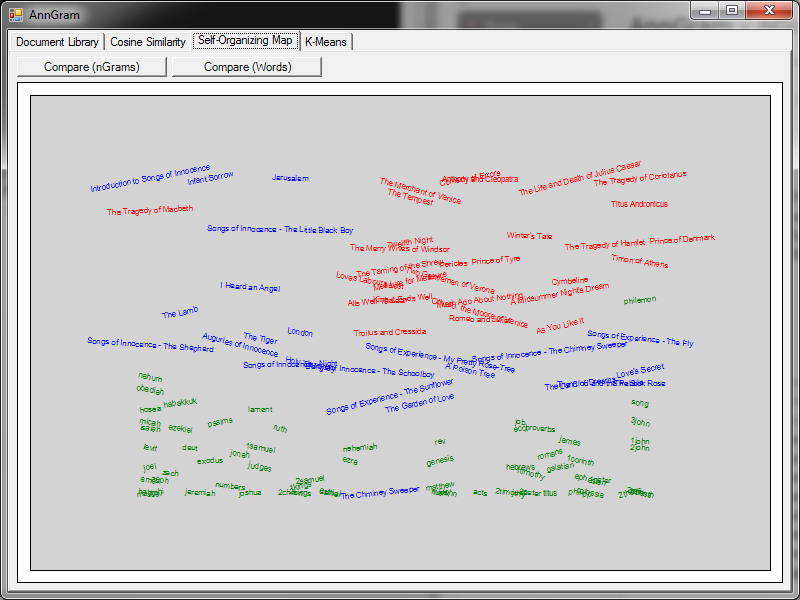

I still intend to look into why the Tragedy of Macbeth does not stay with the rest of Shakespeare’s plays. I still believe that it is because portions of it were possibly written by another author.